Stable Diffusion Inpainting example with Hugging Face Inference Endpoints

Inpainting refers to the process of replacing deteriorated parts of an artwork or filling in missing data to complete the image. This process has long been used in image restoration. With recent AI breakthroughs like DALL-E by OpenAI or Stable Diffusion by CompVis, Stability AI, and LAION, inpainting can also be done with generative models, with impressive results.

You can try it out yourself at the RunwayML Stable Diffusion Inpainting Space.

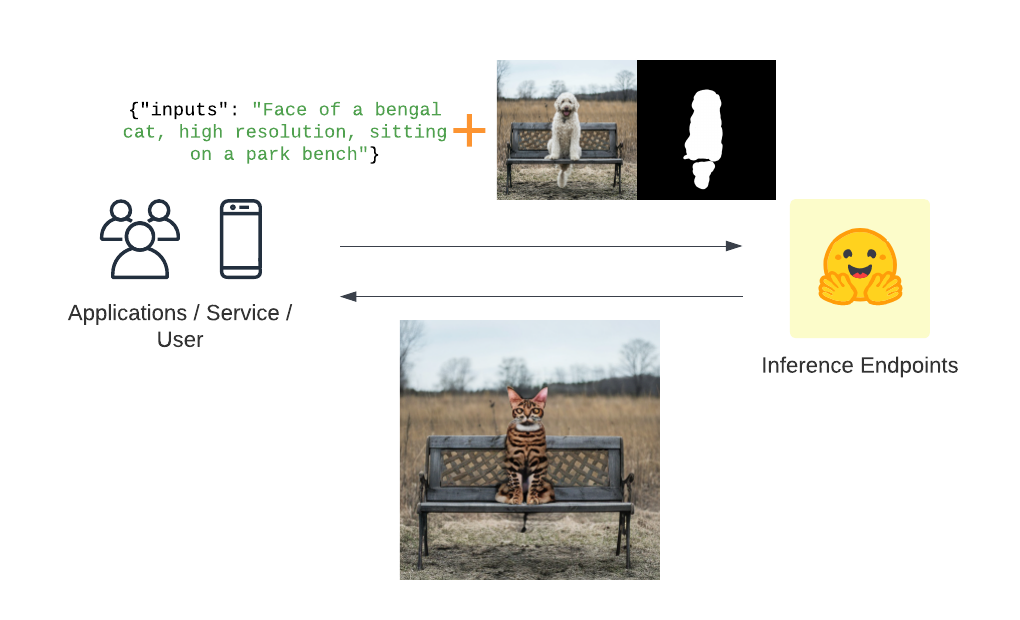

If you are now as impressed as I am, you are probably asking yourself: “OK, how can I integrate inpainting into my applications in a scalable, reliable, and secure way? How can I use it as an API?”

That's where Hugging Face Inference Endpoints can help you! 🤗 Inference Endpoints offers a secure production solution to easily deploy Machine Learning models on dedicated and autoscaling infrastructure managed by Hugging Face.

Inference Endpoints already supports text-to-image generation using Stable Diffusion, which enables you to generate images from a text prompt. In this blog post, you will learn how to enable inpainting workflows with Inference Endpoints using the custom handler feature. Custom handlers allow you to modify, customize, and extend the inference step of your model.

Before we can get started, make sure you meet all of the following requirements:

- An Organization/User with an active credit card. (Add billing here)

- You can access the UI at: https://ui.endpoints.huggingface.co

This tutorial covers how to:

- Create Inpainting Inference Handler

- Deploy Stable Diffusion 2 Inpainting as Inference Endpoint

- Integrate Stable Diffusion Inpainting as API and send HTTP requests using Python

TL;DR;

You can directly hit “deploy” on this repository to get started: https://huggingface.co/philschmid/stable-diffusion-2-inpainting-endpoint

1. Create Inpainting Inference Handler

This tutorial does not cover how to create a custom handler for inference. If you want to learn how to create a custom handler for Inference Endpoints, you can either check out the documentation or go through “Custom Inference with Hugging Face Inference Endpoints”.
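For orientation only, here is a minimal sketch of the shape a custom handler takes: a class named `EndpointHandler` with an `__init__(self, path)` that runs once at startup and a `__call__(self, data)` that runs per request. The class name and the two method signatures mirror the handler.py used in this post. The body is a toy that loads no model, and the returned dictionary layout is an assumption made purely for illustration.

```python
from typing import Any, Dict


class EndpointHandler:
    """Toy handler with the same two-method shape as handler.py (no model is loaded)."""

    def __init__(self, path: str = ""):
        # A real handler would load its pipeline from `path` here, once at startup.
        self.path = path

    def __call__(self, data: Dict[str, Any]) -> Dict[str, Any]:
        # Work on a copy so the caller's dictionary is not mutated by pop().
        data = dict(data)
        prompt = data.pop("inputs", "")
        # Whatever is left is treated as optional parameters (illustrative layout).
        return {"prompt": prompt, "parameters": data}


handler = EndpointHandler(path=".")
result = handler({"inputs": "a cat", "num_inference_steps": 30})
print(result)
```

Loading the model in `__init__` rather than in `__call__` keeps the expensive setup out of the per-request path.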

We are going to deploy philschmid/stable-diffusion-2-inpainting-endpoint, which implements the following handler.py for stabilityai/stable-diffusion-2-inpainting.

from typing import Any
import base64
from io import BytesIO

import torch
from diffusers import DPMSolverMultistepScheduler, StableDiffusionInpaintPipeline
from PIL import Image

# set device
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

if device.type != 'cuda':
    raise ValueError("need to run on GPU")


class EndpointHandler():
    def __init__(self, path=""):
        # load StableDiffusionInpaintPipeline pipeline
        self.pipe = StableDiffusionInpaintPipeline.from_pretrained(path, torch_dtype=torch.float16)
        # use DPMSolverMultistepScheduler
        self.pipe.scheduler = DPMSolverMultistepScheduler.from_config(self.pipe.scheduler.config)
        # move to device
        self.pipe = self.pipe.to(device)

    def __call__(self, data: Any) -> Image.Image:
        """
        :param data: A dictionary containing `inputs` and optional base64-encoded `image` and `mask_image` fields.
        :return: The first generated image as a PIL image.
        """
        inputs = data.pop("inputs", data)
        encoded_image = data.pop("image", None)
        encoded_mask_image = data.pop("mask_image", None)

        # hyperparameters
        num_inference_steps = data.pop("num_inference_steps", 25)
        guidance_scale = data.pop("guidance_scale", 7.5)
        negative_prompt = data.pop("negative_prompt", None)
        height = data.pop("height", None)
        width = data.pop("width", None)

        # process image
        if encoded_image is not None and encoded_mask_image is not None:
            image = self.decode_base64_image(encoded_image)
            mask_image = self.decode_base64_image(encoded_mask_image)
        else:
            image = None
            mask_image = None

        # run inference pipeline
        out = self.pipe(inputs,
                        image=image,
                        mask_image=mask_image,
                        num_inference_steps=num_inference_steps,
                        guidance_scale=guidance_scale,
                        num_images_per_prompt=1,
                        negative_prompt=negative_prompt,
                        height=height,
                        width=width
                        )

        # return first generated PIL image
        return out.images[0]

    # helper to decode input image
    def decode_base64_image(self, image_string):
        base64_image = base64.b64decode(image_string)
        buffer = BytesIO(base64_image)
        image = Image.open(buffer)
        return image

2. Deploy Stable Diffusion 2 Inpainting as Inference Endpoint

UI: https://ui.endpoints.huggingface.co/

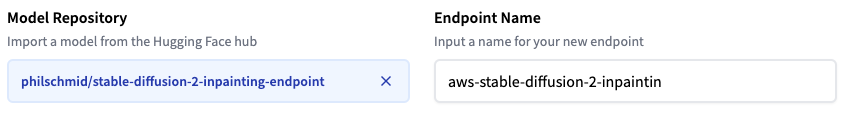

The first step is to deploy our model as an Inference Endpoint. We can deploy our custom handler the same way as a regular Inference Endpoint.

Select the repository, the cloud, and the region, adjust the instance and security settings, and deploy.

When you create your Endpoint, the Inference Endpoints service checks whether a valid handler.py is available and, if so, uses it to serve requests no matter which “Task” you select.

The UI will automatically select the preferred instance type for us after it recognizes the model. We can create our endpoint by clicking on “Create Endpoint”. This builds our image artifact and then deploys our model, which should take a few minutes.
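Because deployment takes a few minutes, a script that calls the endpoint right after creation may want to wait until it is up. The following is a generic polling sketch, not part of the Inference Endpoints tooling. The function `wait_until_ready` is a hypothetical helper, and how readiness is detected is left to a caller-supplied `is_ready` function, since this post does not specify it.

```python
import time
from typing import Callable


def wait_until_ready(
    is_ready: Callable[[], bool],
    timeout_s: float = 600.0,
    interval_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call `is_ready` repeatedly until it returns True or the timeout elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        if is_ready():
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(interval_s)


# Stand-in probe for demonstration: reports "ready" on the third check.
attempts = iter([False, False, True])
ready = wait_until_ready(lambda: next(attempts), timeout_s=5.0, interval_s=0.0)
print(ready)
```

In practice `is_ready` could wrap whatever check fits your setup, for example a small test request to the endpoint URL.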

3. Integrate Stable Diffusion Inpainting as API and send HTTP requests using Python

Hugging Face Inference Endpoints can work with binary data directly, but our custom handler expects the base image and the mask as base64-encoded strings inside a JSON payload, so we encode both images before sending them. We are going to use requests to send our requests (make sure you have it installed: pip install requests). We need to replace ENDPOINT_URL and HF_TOKEN with our own values, and then we can send a request. Since we are using it as an API, we need to provide our base image, an image with the mask, and our text prompt.

import base64
from io import BytesIO

import requests as r
from PIL import Image

ENDPOINT_URL = ""
HF_TOKEN = ""


# helper image utils
def encode_image(image_path):
    with open(image_path, "rb") as i:
        b64 = base64.b64encode(i.read())
    return b64.decode("utf-8")


def predict(prompt, image, mask_image):
    image = encode_image(image)
    mask_image = encode_image(mask_image)

    # prepare sample payload
    payload = {"inputs": prompt, "image": image, "mask_image": mask_image}
    # headers
    headers = {
        "Authorization": f"Bearer {HF_TOKEN}",
        "Content-Type": "application/json",
        "Accept": "image/png"  # important to get an image back
    }

    response = r.post(ENDPOINT_URL, headers=headers, json=payload)
    # fail early on HTTP errors instead of trying to open an error body as an image
    response.raise_for_status()
    img = Image.open(BytesIO(response.content))
    return img


prediction = predict(
    prompt="Face of a bengal cat, high resolution, sitting on a park bench",
    image="dog.png",
    mask_image="mask_dog.png"
)

If you want to test an example, you can find the dog.png and mask_dog.png in the repository at: https://huggingface.co/philschmid/stable-diffusion-2-inpainting-endpoint/tree/main
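The handler also reads a few optional top-level keys from the request body: `num_inference_steps` (default 25), `guidance_scale` (default 7.5), `negative_prompt`, `height`, and `width`. As a sketch of how a client could pass them, the hypothetical helper `build_payload` below assembles the JSON payload from raw image bytes and rejects keys the handler does not read. The placeholder byte strings stand in for real image files.

```python
import base64
import json
from typing import Any, Dict

# Optional keys that the handler reads with data.pop(...).
OPTIONAL_KEYS = {"num_inference_steps", "guidance_scale", "negative_prompt", "height", "width"}


def build_payload(prompt: str, image_bytes: bytes, mask_bytes: bytes, **options: Any) -> Dict[str, Any]:
    """Build the JSON-serializable request body expected by the inpainting handler."""
    unknown = set(options) - OPTIONAL_KEYS
    if unknown:
        raise ValueError(f"unsupported options: {sorted(unknown)}")
    payload: Dict[str, Any] = {
        "inputs": prompt,
        "image": base64.b64encode(image_bytes).decode("utf-8"),
        "mask_image": base64.b64encode(mask_bytes).decode("utf-8"),
    }
    payload.update(options)
    return payload


payload = build_payload(
    "Face of a bengal cat, high resolution, sitting on a park bench",
    image_bytes=b"fake image bytes",  # placeholder; read dog.png in real use
    mask_bytes=b"fake mask bytes",    # placeholder; read mask_dog.png in real use
    num_inference_steps=30,
    guidance_scale=9.0,
)
body = json.dumps(payload)
print(sorted(payload))
```

The resulting dictionary can be passed as the `json=` argument of the POST request in place of the three-key payload.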

The result of the request should be a PIL image that we can display.
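If the endpoint returns an error body instead of an image, opening the response as an image fails with a confusing message. One way to guard against that, sketched here with the hypothetical helper `save_png_response`, is to check for the standard PNG file signature before writing the bytes to disk. The assumption is that the endpoint honors the `Accept: image/png` header used above.

```python
from pathlib import Path

# Every PNG file starts with these eight bytes.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def save_png_response(content: bytes, out_path: str) -> Path:
    """Write response bytes to `out_path` if they look like a PNG, else raise."""
    if not content.startswith(PNG_SIGNATURE):
        preview = content[:200].decode("utf-8", errors="replace")
        raise ValueError(f"response is not a PNG image: {preview!r}")
    path = Path(out_path)
    path.write_bytes(content)
    return path


# A JSON error body is rejected before anything is written.
try:
    save_png_response(b'{"error": "something went wrong"}', "result.png")
except ValueError as err:
    print(err)
```

With a real response, the call would be `save_png_response(response.content, "result.png")`.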

Conclusion

We successfully created and deployed a Stable Diffusion Inpainting inference handler to Hugging Face Inference Endpoints in less than 30 minutes.

Having scalable, secure API endpoints will allow you to move from experimenting (in a Space) to integrated production workloads, e.g., a JavaScript frontend or desktop app with an API backend.

Now, it's your turn! Sign up and create your custom handler within a few minutes!

Thanks for reading! If you have any questions, feel free to contact me on Twitter or LinkedIn.